For years, campaigns have used AI to make robocalls and generate speech captions. But now, some are using it to create a new reality.

“In some sense, that’s been a part of American elections since the beginning,” political science professor Michael Ensley said. “I think what makes, potentially, artificial intelligence sort of different, is its combination and use with social media and the fact that it can be spread sort of quickly. In many ways, it’s about the degree and reach of it and how we get our news.”

As the technology advances, AI can be used to create realistic images and ads. For example, Elon Musk recently posted an AI-generated image of Kamala Harris wearing a communist uniform to express a political opinion about her.

Although the image was fake, it still had an impact. Millions of users on the social platform X saw the image; hundreds of thousands interacted with the post.

As eligible voters prepare to exercise their civic duty this November, some may wonder how to stay educated and avoid misinformation before Election Day.

Ensley said there are existing risks with AI and its misuse in campaigns.

“It is here…I think it’s more useful to think about how we leverage it, how we use it and how we prevent or minimize those things we worry about,” he said.

Many news sources have embraced the culture of social media by sharing news content online. However, with 68% of people in the U.S. using social media, there is a fair chance of error because not everyone has the same level of media literacy.

“The messages can come to us that are true that we don’t know are true, or that are fiction that we think might be true, or just ‘mis’ and ‘dis’ information where the intent is to mislead,” said Thor Wasbotten, a professor in the School of Media and Journalism.

There are methods and tools that people can use to tell when images and text are generated by AI or are simply false, but not everyone on the internet has access to these tools.

“What is potentially more worrying,” Ensley said, “is that if so much information is being produced that is misleading, false, that it may lead people to discount all information regardless of the source. They’ll become less trusting overall.”

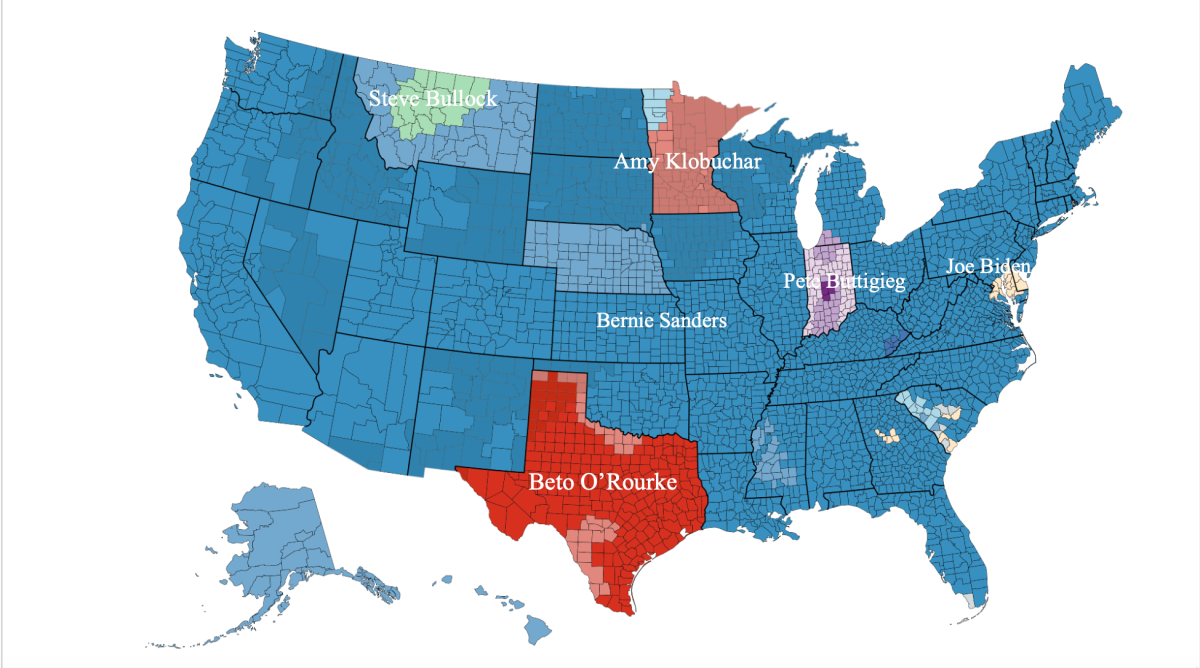

When consuming media, viewers may naturally gravitate toward news or biases that confirm pre-existing beliefs. This behavior, referred to as the “echo chamber effect,” often causes individuals to consume only one side of the information when, in reality, there is more to consider.

“I underappreciated the effect of social media on sort of the environment that we’re in,” Ensley said. “I think that has had some pretty negative consequences, not because of misinformation. It encourages instant reactions and discourages deliberation. Everything is almost instantaneous.”

Each interaction with online content, whether a like, share or comment, teaches a device or application how to target an individual.

Wasbotten said when users create an echo chamber for themselves, the algorithms will continue to push that message toward them.

“If we can continue to feed it, we might use AI to do that because we can feed you many, many more messages than would otherwise be coming at you. We can do it faster now,” he said.

AI-generated misinformation is often carefully crafted, which can make it hard to take back a narrative once it has spread, even with the strongest defenses.

“The people that want to influence your decisions or reinforce what you might already think, and do it in a nefarious way or an insincere way, they know there’s mechanisms out there to do that at an amplified rate,” Wasbotten said.

AI and misinformation have changed the political climate and will continue to do so as long as the technology has an impact. It is, however, possible for individuals to keep these advancements from changing their attitudes about politics and how they approach elections.

Wasbotten and Ensley both advised people to research and then compare information from multiple sources to verify facts. There are sources on dozens of platforms, but it is important to understand where the facts come from.

Going to candidates directly for information is also a viable option. There is plenty to learn directly from the campaigns and how they promote themselves, Ensley said.

“In the end, it’s not a losing battle,” Wasbotten said. “We have to find solutions through the organizations that build this technology, through the government agencies that might be able to appropriately regulate the technology, and then of course the importance of the users to understand what is happening.”

Ari Collins is a reporter. Contact her at [email protected].